Rana Gujral, CEO of Behavioral Signals, discusses the future of NLP and sentiment analysis to improve customer service

- Episode: 140 of 327

- Published: 9 Oct 2022

- Length: 41 min

- Language: English

- Category: Economics & Business

Rana Gujral, CEO of Behavioral Signals since 2018, joined the company after a distinguished tech career growing companies like Logitech, TiZE, and Cricut. Behavioral Signals uses emotion and behavioral science to help contact center agents deliver better service. Rana and the team are on a mission to improve customer interactions by using signals beyond the spoken word, such as voice tone and pitch, to understand exactly what customers need.

Listen and learn...

1. How to train AI models on past service interactions and outcomes to determine which agents should speak to which customers

2. How to use deep learning and NLP to process non-speech behavior signals like intonation, pitch, and tonal variance

3. How behavior signals can be used to predict stress, duress, and propensity to buy or pay

4. How to achieve high levels of prediction accuracy without processing "the spoken word"

5. Why tone and pitch are better indicators of sentiment than actual words across any language

6. How to compete with Google/Microsoft/Amazon for data when building an AI-first conversational intelligence product

7. The biggest opportunity Rana sees to use AI to help humans live better lives

References in this episode:

- Mahesh Ram from Solvvy (now Zoom) on AI and the Future of Work

- Gadi Shamia from Replicant on AI and the Future of Work

- How personalization algorithms work in your social feeds

- Behavioral Signals

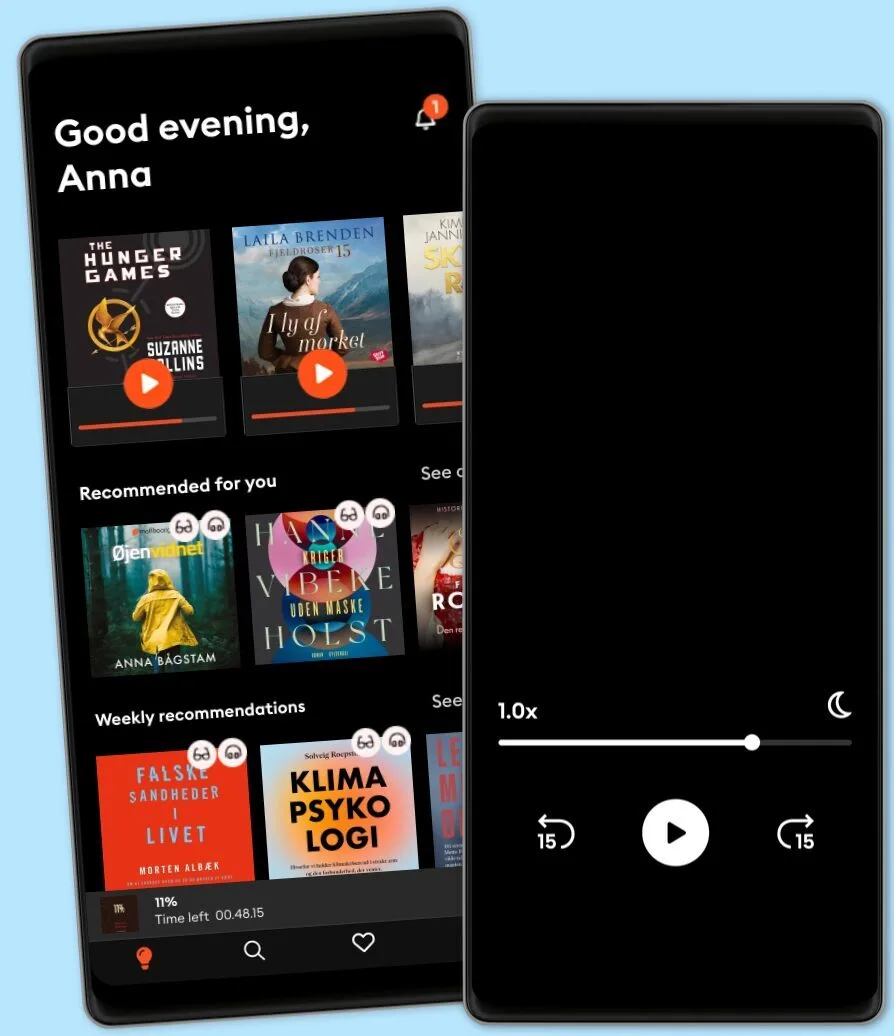
