Safeguarding Against Malicious Use of Large Language Models: A Review of the OWASP Top 10 for LLMs | A Conversation with Jason Haddix | Redefining CyberSecurity with Sean Martin

- Episode: 189 of 591

- Published: 14 June 2023

- Length: 51 min

- Language: English

- Category: Economy & Business

Guest: Jason Haddix, CISO and Hacker in Charge at BuddoBot Inc [@BuddoBot]

On LinkedIn | https://www.linkedin.com/in/jhaddix/

On Twitter | https://twitter.com/Jhaddix

____________________________

Host: Sean Martin, Co-Founder at ITSPmagazine [@ITSPmagazine] and Host of Redefining CyberSecurity Podcast [@RedefiningCyber]

On ITSPmagazine | https://www.itspmagazine.com/itspmagazine-podcast-radio-hosts/sean-martin

____________________________

This Episode’s Sponsors

Imperva | https://itspm.ag/imperva277117988

Pentera | https://itspm.ag/penteri67a

___________________________

Episode Notes

In this episode of the Redefining CyberSecurity Podcast, we take an in-depth look at the potential implications of large language models (LLMs) and artificial intelligence for the cybersecurity landscape. Jason Haddix, a renowned expert in offensive security, shares his perspective on the evolving risks and opportunities these new technologies bring to businesses and individuals alike. Sean and Jason discuss the following risks of using LLMs:

- 🚀 Prompt Injections
- 💧 Data Leakage
- 🏖️ Inadequate Sandboxing
- 📜 Unauthorized Code Execution
- 🌐 SSRF Vulnerabilities
- ⚖️ Overreliance on LLM-generated Content
- 🧭 Inadequate AI Alignment
- 🚫 Insufficient Access Controls
- ⚠️ Improper Error Handling
- 💀 Training Data Poisoning

From an offensive security standpoint, Haddix emphasizes that LLMs open up an entirely new world of capabilities, even for non-expert users. He envisions a near future in which AI models trained on diverse data, such as OCR and image recognition data, can answer questions about people's private lives based on their public social media activity. The risk, however, isn't limited to individuals: businesses are equally exposed.

According to Haddix, businesses worldwide are rushing to leverage proprietary data they've collected in order to generate profits. They envision using LLMs, such as GPT, to ask intelligent questions of their data that could inform decisions and fuel growth. This has given rise to the development of numerous APIs, many of which are integrated with LLMs to produce their output.

However, Haddix warns of the vulnerabilities that this widespread use of LLMs may introduce. Each new integration and layer of connectivity creates more opportunities for prompt injection attacks, through which attackers aim to exploit these interfaces to steal data. He also points out that the very data a company uses to train its LLM may itself be a target, with attackers potentially able to smuggle out sensitive information through natural language interactions.

Another concern Haddix raises is the interconnected nature of these systems, as companies link their LLMs to applications such as Slack and Salesforce. Connections intended for ingesting or querying data could also be exploited for nefarious ends. As a result, data leakage, already a risk in any LLM implementation, opens multiple avenues of attack.

Sean Martin, the podcast's host, echoes Haddix's concerns, imagining scenarios where private data could be leveraged and manipulated. He notes that even benign-seeming interactions, such as conversing with a bot on a site like Etsy about jacket preferences, could potentially expose a wealth of private data.

Haddix also warns that these systems can be gamed, returning to the Etsy example to illustrate how an attacker might extract data such as sellers' earnings or even their private location information. He likens data leakage in LLM applications to SQL injection in web applications. In conclusion, Haddix stresses the need to understand and safeguard against these risks, lest organizations inadvertently expose themselves to attack through their own LLMs.

The episode reviews all ten items of the OWASP Top 10 for LLMs and points to a few other valuable resources, listed below.

We hope you enjoy this conversation!

____________________________

Watch this and other videos on ITSPmagazine's YouTube Channel

Redefining CyberSecurity Podcast with Sean Martin, CISSP playlist:

📺 https://www.youtube.com/playlist?list=PLnYu0psdcllS9aVGdiakVss9u7xgYDKYq

ITSPmagazine YouTube Channel:

📺 https://www.youtube.com/@itspmagazine

Be sure to share and subscribe!

____________________________

Resources

The inspiring Tweet: https://twitter.com/Jhaddix/status/1661477215194816513

Announcing the OWASP Top 10 for Large Language Models (AI) Project (Steve Wilson): https://www.linkedin.com/pulse/announcing-owasp-top-10-large-language-models-ai-project-steve-wilson/

OWASP Top 10 List for Large Language Models Descriptions: https://owasp.org/www-project-top-10-for-large-language-model-applications/descriptions/

Daniel Miessler Blog: The AI Attack Surface Map v1.0: https://danielmiessler.com/p/the-ai-attack-surface-map-v1-0/

PODCAST: Navigating the AI Security Frontier: Balancing Innovation and Cybersecurity | ITSPmagazine Event Coverage: RSAC 2023 San Francisco, USA | A Conversation about AI security and MITRE Atlas with Dr. Christina Liaghati: https://itsprad.io/redefining-cybersecurity-163

Learn more about MITRE Atlas: https://atlas.mitre.org/

MITRE Atlas on Slack (invitation): https://join.slack.com/t/mitreatlas/shared_invite/zt-10i6ka9xw-~dc70mXWrlbN9dfFNKyyzQ

Gandalf AI Playground: https://gandalf.lakera.ai/

____________________________

To see and hear more Redefining CyberSecurity content on ITSPmagazine, visit:

https://www.itspmagazine.com/redefining-cybersecurity-podcast

Are you interested in sponsoring an ITSPmagazine Channel?

👉 https://www.itspmagazine.com/sponsor-the-itspmagazine-podcast-network

Hosted by Simplecast, an AdsWizz company. See pcm.adswizz.com for information about our collection and use of personal data for advertising.

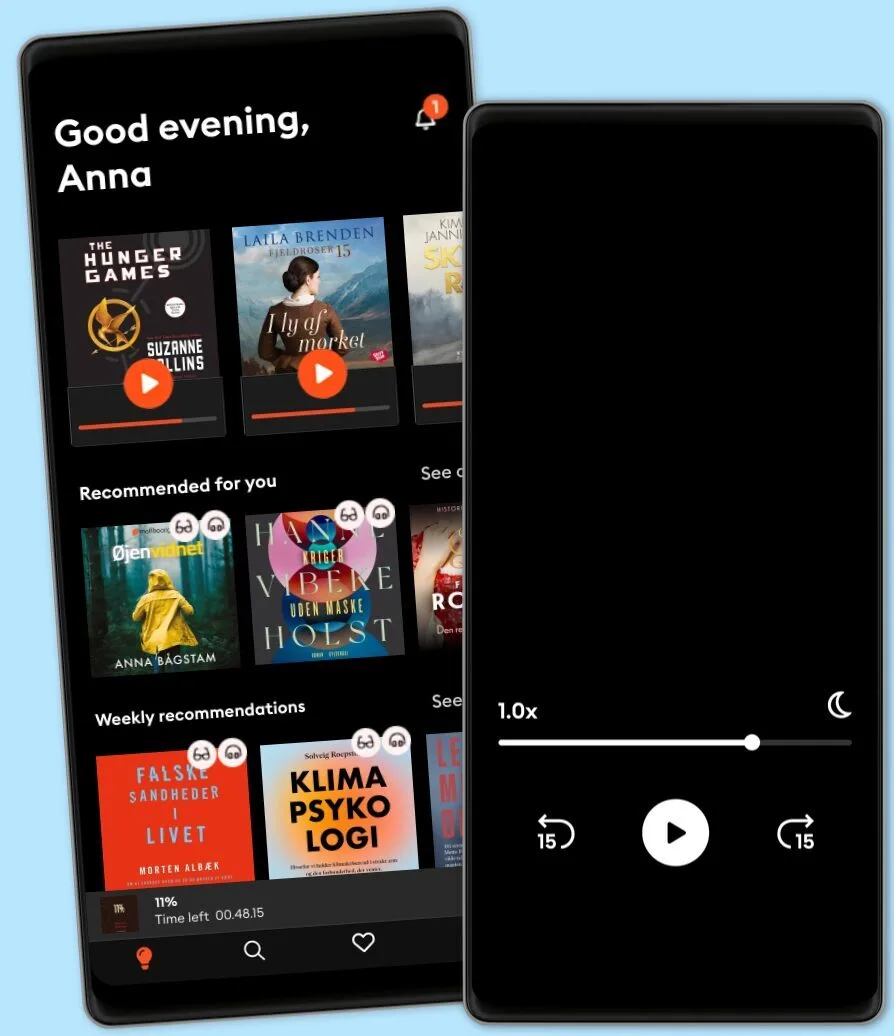

Lyt når som helst, hvor som helst

Nyd den ubegrænsede adgang til tusindvis af spændende e- og lydbøger - helt gratis

- Lyt og læs så meget du har lyst til

- Opdag et kæmpe bibliotek fyldt med fortællinger

- Eksklusive titler + Mofibo Originals

- Opsig når som helst

Other podcasts you might like ...

- The Journal.The Wall Street Journal & Spotify Studios

- The Can Do WayTheCanDoWay

- 1,5 graderAndreas Bäckäng

- Networth and Chill with Your Rich BFFVivian Tu

- Maxwell Leadership Executive PodcastJohn Maxwell

- Mark My Words PodcastMark Homer

- Ruby RoguesCharles M Wood

- EGO NetCastMartin Lindeskog

- The Pathless Path with Paul MillerdPaul Millerd

- SvD LedarredaktionenSvenska Dagbladet

- The Journal.The Wall Street Journal & Spotify Studios

- The Can Do WayTheCanDoWay

- 1,5 graderAndreas Bäckäng

- Networth and Chill with Your Rich BFFVivian Tu

- Maxwell Leadership Executive PodcastJohn Maxwell

- Mark My Words PodcastMark Homer

- Ruby RoguesCharles M Wood

- EGO NetCastMartin Lindeskog

- The Pathless Path with Paul MillerdPaul Millerd

- SvD LedarredaktionenSvenska Dagbladet

Dansk

Danmark