E33: The Tiny Model Revolution with Ronen Eldan and Yuanzhi Li of Microsoft Research

- Episode: 33 of 327

- Published: June 6, 2023

- Length: 1 h 59 min

- Language: English

- Category: Economics & Business

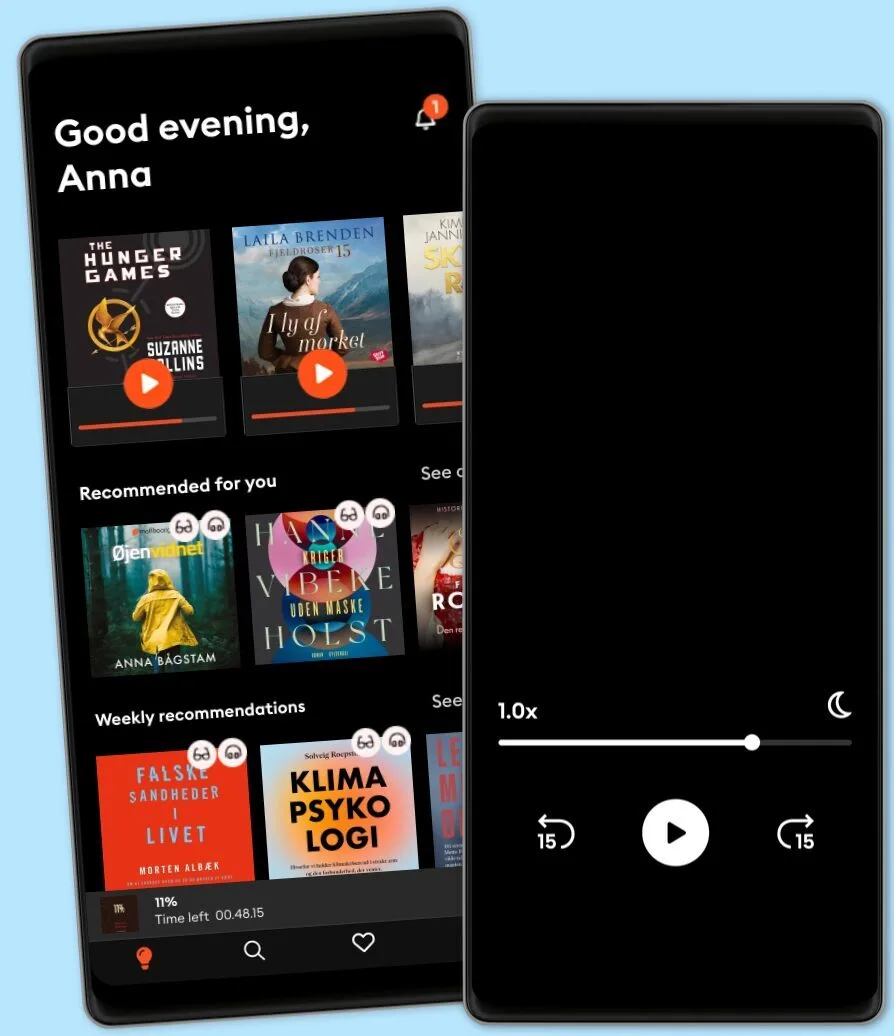

Nathan Labenz sits down with Ronen Eldan and Yuanzhi Li of Microsoft Research to discuss TinyStories, the small natural language dataset they created. TinyStories is designed to reflect the full richness of natural language while remaining small enough to support research on modest compute budgets. Using this dataset, they explored language model performance, behavior, and mechanisms by training a series of models ranging from just 1 million to 33 million parameters, which is still only about 2% of the scale of GPT-2. In this conversation, Nathan, Ronen, and Yuanzhi touch on LM reasoning, emergence, interpretability, and how much of this understanding can be extended to LLMs.

RECOMMENDED PODCAST: The HR industry is at a crossroads. What will it take to construct the next generation of incredible businesses – and where can people leaders have the most business impact? Hosts Nolan Church and Kelli Dragovich have been through it all, the highs and the lows – IPOs, layoffs, executive turnover, board meetings, culture changes, and more. With a lineup of industry vets and experts, Nolan and Kelli break down the nitty-gritty details, trade-offs, and dynamics of constructing high-performing companies. Through unfiltered conversations that can only happen between seasoned practitioners, Kelli and Nolan dive deep into the kind of leadership-level strategy that often happens behind closed doors. Check out the first episode with the architect of Netflix’s culture deck, Patty McCord: https://link.chtbl.com/hrheretics

LINKS: TinyStories paper: https://huggingface.co/papers/2305.07759

TIMESTAMPS:

- (00:00) Episode Preview

- (07:12) The inspiration for the TinyStories project

- (15:07) Sponsor: Omneky

- (15:44) Creating the TinyStories dataset

- (21:27) GPT-4 vs GPT-3.5

- (24:13) Did the TinyStories team try any other versions of GPT-4?

- (29:23) Curriculum models and weirder curriculums

- (35:34) What does reasoning mean?

- (46:27) What does emergence mean?

- (01:01:44) The curriculum development space

- (01:11:40) The similarities between models and human development

- (01:20:12) Fewer layers vs. more layers

- (01:29:22) Attention heads

- (01:33:40) Semantic attention head

- (01:36:54) Neuron technique used in developing the TinyStories model

- (01:52:20) Interpretability work that inspires Ronen and Yuanzhi

TWITTER: @CogRev_Podcast @EldanRonen (Ronen) @labenz (Nathan) @eriktorenberg (Erik)

SPONSORS: Shopify is the global commerce platform that helps you sell at every stage of your business. Shopify powers 10% of ALL eCommerce in the US. And Shopify's the global force behind Allbirds, Rothy's, and Brooklinen, and 1,000,000s of other entrepreneurs across 175 countries. From their all-in-one e-commerce platform, to their in-person POS system – wherever and whatever you're selling, Shopify's got you covered. With free Shopify Magic, sell more with less effort by whipping up captivating content that converts – from blog posts to product descriptions using AI. Sign up for $1/month trial period: https://shopify.com/cognitive

Thank you Omneky for sponsoring The Cognitive Revolution. Omneky is an omnichannel creative generation platform that lets you launch hundreds of thousands of ad iterations that actually work, customized across all platforms, with a click of a button. Omneky combines generative AI and real-time advertising data. Mention "Cog Rev" for 10% off.

This show is produced by Turpentine, a network of podcasts, newsletters, and more, covering technology, business, and culture, all from the perspective of industry insiders and experts. We’re launching new shows every week, and we’re looking for industry-leading sponsors. If you think that might be you and your company, email us at erik@turpentine.co.

Music Credit: MusicLM

More show notes and reading material released in our Substack: https://cognitiverevolution.substack.com
