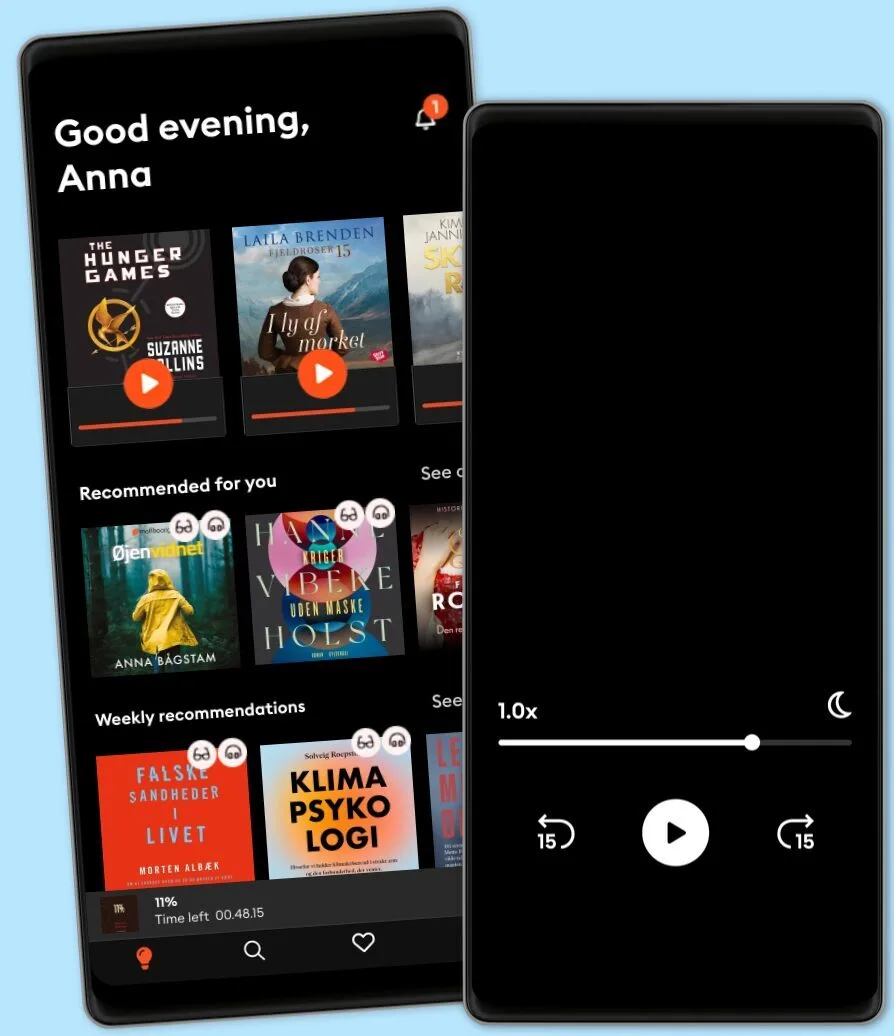

AI for everyone

- Episode: 7 of 46

- Published: 3 Sep 2019

- Length: 29 min

- Language: English

- Category: Personal development

While there is a lot of excitement about AI research, there are also concerns about the way it might be implemented, used and abused. In this episode Hannah investigates the more human side of the technology, some ethical issues around how it is developed and used, and the efforts to create a future of AI that works for everyone.

If you have a question or feedback on the series, message us on Twitter (@DeepMind using the hashtag #DMpodcast) or email us at podcast@deepmind.com.

Further reading:

- The Partnership on AI

- ProPublica: investigation into machine bias in criminal sentencing

- Science Museum – free exhibition: Driverless: who is in control (until Oct 2020)

- Survival of the best fit: An interactive game that demonstrates some of the ways in which bias can be introduced into AI systems, in this case for hiring

- Joy Buolamwini: AI, Ain’t I a Woman: A spoken word piece exploring AI bias, and systems not recognising prominent black women

- Hannah Fry: Hello World - How to be Human in the Age of the Machine

- DeepMind: Safety and Ethics

- Future of Humanity Institute: AI Governance: A Research Agenda

Interviewees: Verity Harding, Co-Lead of DeepMind Ethics and Society; DeepMind’s COO Lila Ibrahim; and research scientists William Isaac and Silvia Chiappa.

Credits:

- Presenter: Hannah Fry

- Editor: David Prest

- Senior Producer: Louisa Field

- Producers: Amy Racs, Dan Hardoon

- Binaural Sound: Lucinda Mason-Brown

- Music composition: Eleni Shaw (with help from Sander Dieleman and WaveNet)

- Commissioned by DeepMind

Please leave us a review on Spotify or Apple Podcasts if you enjoyed this episode. We always want to hear from our audience, whether that's in the form of feedback, new ideas or guest recommendations!

