X Corp.'s Content Moderation Practices and Challenges.

- Episode: 591 of 793

- Published: 25 Aug 2025

- Length: 47 min

- Language: English

- Category: True Crime

This research offers an expert analysis of account suspension and moderation practices at X (formerly Twitter), highlighting a significant disconnect between the platform's stated commitment to "free speech" and its often opaque, inconsistent enforcement of its rules. It outlines how suspensions are handled primarily by automated systems, which are effective against spam but leave individual users without due process because human support is limited and appeals are rejected automatically. The analysis details the reasons for suspension, ranging from technical "spam" violations to more severe infractions such as hate speech, and explains the tiers of penalties users may face. Ultimately, it critically examines the fairness, transparency, and effectiveness of X's moderation, suggesting that operational changes since 2022, including reductions in human staff, have eroded user trust and driven many users to seek alternative, often decentralized, platforms with more transparent, community-driven governance models.

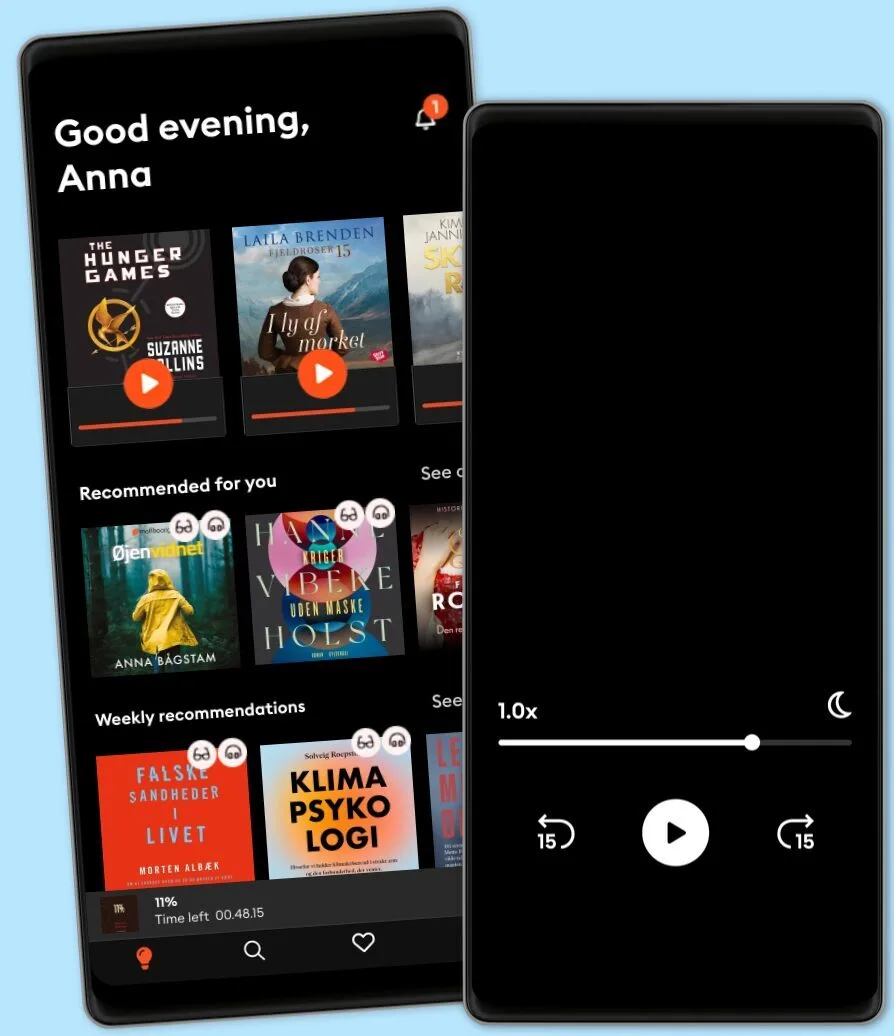


Other podcasts you might like ...

- Il Gigante Buono, e altre bugie che ci raccontiamo (Orange Podcast)

- En amerikansk epidemi (Patrick Stanelius)

- Hagen-fallet: Spårlöst försvunnen (Antonio de la Cruz)

- Helikopterpiloten (Victoria Rinkous)

- HQ-skandalen: Den stora bankhärvan (Victoria Rinkous)

- Serieskytten Peter Mangs (Anton Vretander)

- Hemtjänstmaffian - en svensk vårdskandal (Emma Ikekwe)

- Järvakonflikten (Carl Haeger)

- Gola, vittna, dö (Amanda Leander)

- Beyond All Repair (WBUR)

