Jonathan Frankle, Harvard Professor and MosaicML Chief Scientist, discusses the past, present, and future of deep learning

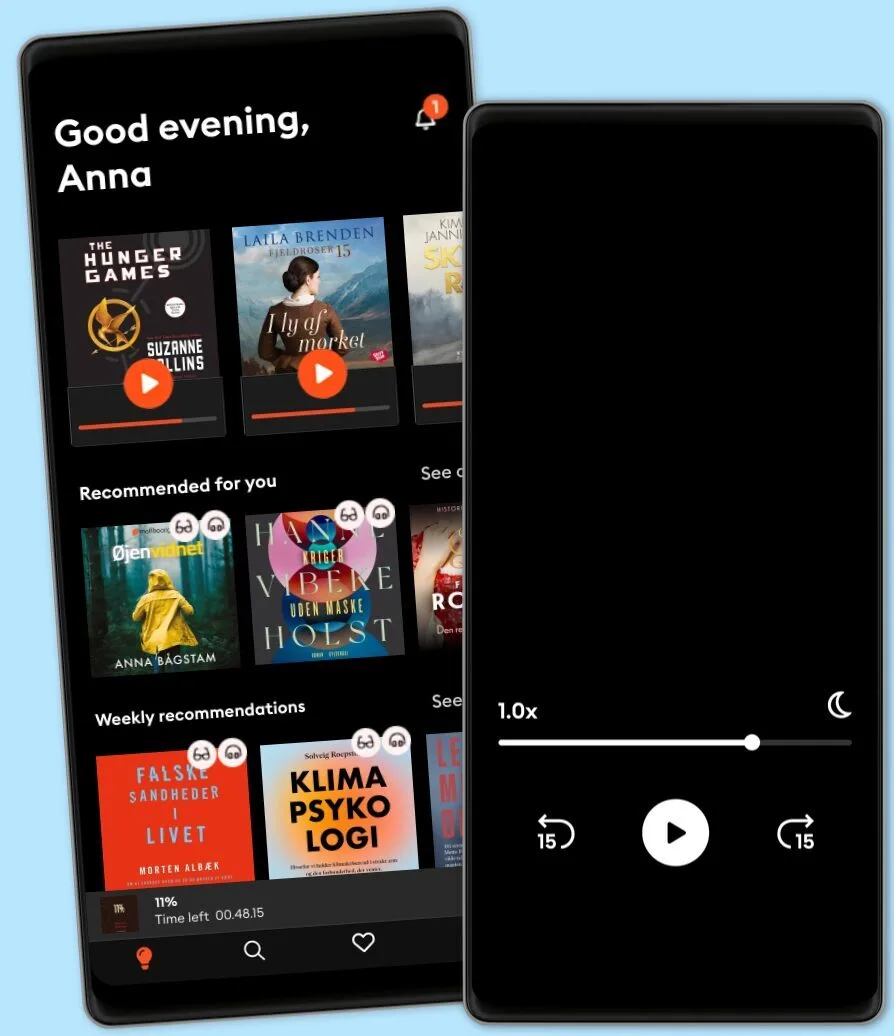

- Episode: 144 of 327

- Published: 6 Nov 2022

- Length: 39 min

- Language: English

- Category: Economics & Business

Jonathan Frankle, incoming Harvard Professor and Chief Scientist at MosaicML, is focused on reducing the cost of training neural nets. He received his PhD at MIT and his BSE and MSE from Princeton.

Jonathan has also been instrumental in shaping technology policy related to AI. He contributed to a landmark facial recognition report as a Staff Technologist at the Center on Privacy and Technology at Georgetown Law.

Thanks to great guest Hina Dixit from Samsung NEXT for the introduction to Jonathan!

Listen and learn...

1. Why we can't understand deep neural nets the way we understand biology or physics.
2. Jonathan's "lottery ticket hypothesis": neural nets are 50-90% bigger than they need to be, but it's hard to find which parts aren't necessary.
3. How researchers are finding ways to reduce the cost and complexity of training neural nets.
4. Why we shouldn't expect another AI winter because "it's now a fundamental substrate of research".
5. Which AI problems are a good fit for deep learning, and which ones aren't.
6. What role regulation should play in enforcing responsible use of AI.
7. How Jonathan and his CTO Hanlin Tang create a culture at MosaicML that fosters responsible use of AI.
8. Why Jonathan says "...We're building a ladder to the moon if we think today's neural nets will lead to AGI."

References in this episode...

- The AI Bill of Rights

- MosaicML

- Jonathan's personal site
