Model inspection and interpretation at Seldon

- Episode: 48 of 349

- Published: 17 June 2019

- Length: 43 min

- Language: English

- Category: Non-fiction

Interpreting complicated models is a hot topic. How can we trust and manage AI models that we can’t explain? In this episode, Janis Klaise, a data scientist with Seldon, joins us to talk about model interpretation and Seldon’s new open source project called Alibi. Janis also gives some of his thoughts on production ML/AI and how Seldon addresses related problems.

Sponsors:

- DigitalOcean: Check out DigitalOcean’s dedicated vCPU Droplets with dedicated vCPU threads. Get started for free with a $50 credit. Learn more at do.co/changelog.

- DataEngPodcast: A podcast about data engineering and modern data infrastructure.

- Fastly: Our bandwidth partner. Fastly powers fast, secure, and scalable digital experiences. Move beyond your content delivery network to their powerful edge cloud platform. Learn more at fastly.com.

Featuring:

- Janis Klaise: GitHub, LinkedIn, X

- Chris Benson: Website, GitHub, LinkedIn, X

- Daniel Whitenack: Website, GitHub, X

Show Notes:

- Seldon

- Seldon Core

- Alibi

Books:

- “The Foundation Series” by Isaac Asimov

- “Interpretable Machine Learning” by Christoph Molnar

Upcoming Events:

- Register for upcoming webinars here!

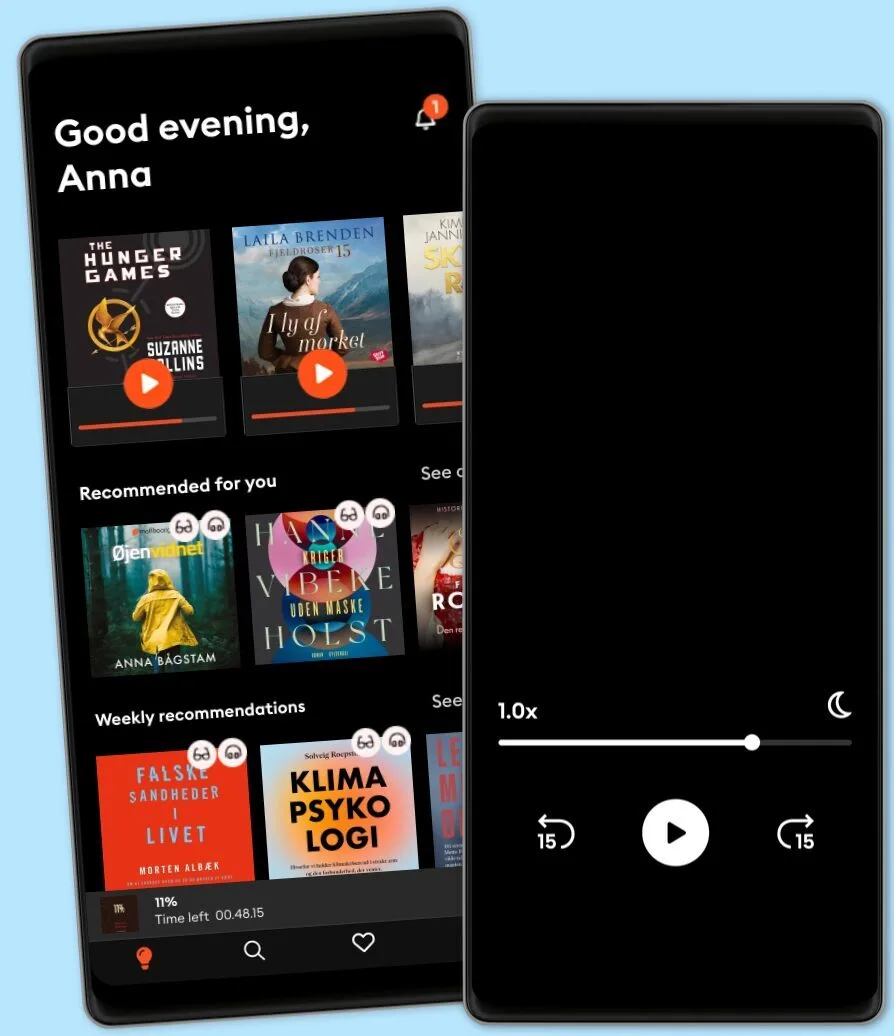
