Software Fixing Hardware Problems

- Episode: 12 of 328

- Published: April 4, 2019

- Length: 22 min

- Language: English

- Category: Nonfiction

The full post (if you're not seeing links and images) can be found here (https://cisoseries.com/defense-in-depth-software-fixing-hardware-problems/).

As we have seen with the Boeing 737 MAX crashes, when software tries to fix hardware flaws, it can turn deadly. What are the security implications?

Check out this post and discussion for the basis of our conversation on this week's episode, co-hosted by me, David Spark (@dspark), the creator of CISO Series, and Allan Alford (@AllanAlfordinTX), CISO at Mitel. Our guest for this episode is Dan Glass (@djglass), former CISO for American Airlines.

Thanks to this week's podcast sponsor, Unbound Tech.

Founded in 2014, Unbound Tech equips companies with the first pure-software solution to protect cryptographic keys, ensuring they never exist anywhere in complete form. By eliminating the burden of hardware solutions, keys can be distributed across any cloud, endpoint or server to offer a new paradigm for security, privacy and digital innovation.

On this episode of Defense in Depth, you'll learn:

- The Boeing 737 MAX crashes are such a big story because airplanes don't usually crash: the airline industry is steeped in a culture of safety.

- Even though safety culture is predominant in the airline industry, there were safety features (e.g., training for the pilots on this new software-correcting feature) that were optional for airlines to purchase.

- Software is now in charge of everything. What company is not a digital company? We can't avoid the fact that software runs our systems, including the ones that control our safety.

- The software industry does not operate in a safety culture like the airline industry does.

- Is this just a data integrity issue? Is that the root cause of the problems? How do we increase the integrity of data?

- Can we override software when we believe it's making a bad decision? Allan brought up the example of a friend who tried to swerve out of his lane to avoid something in the road. The self-driving car forced him back into his lane, and he hit the thing he was trying to avoid. Fortunately, it was just a bag, but what if it had been a child? The self-correcting software didn't let him take over and avoid the object in the road.

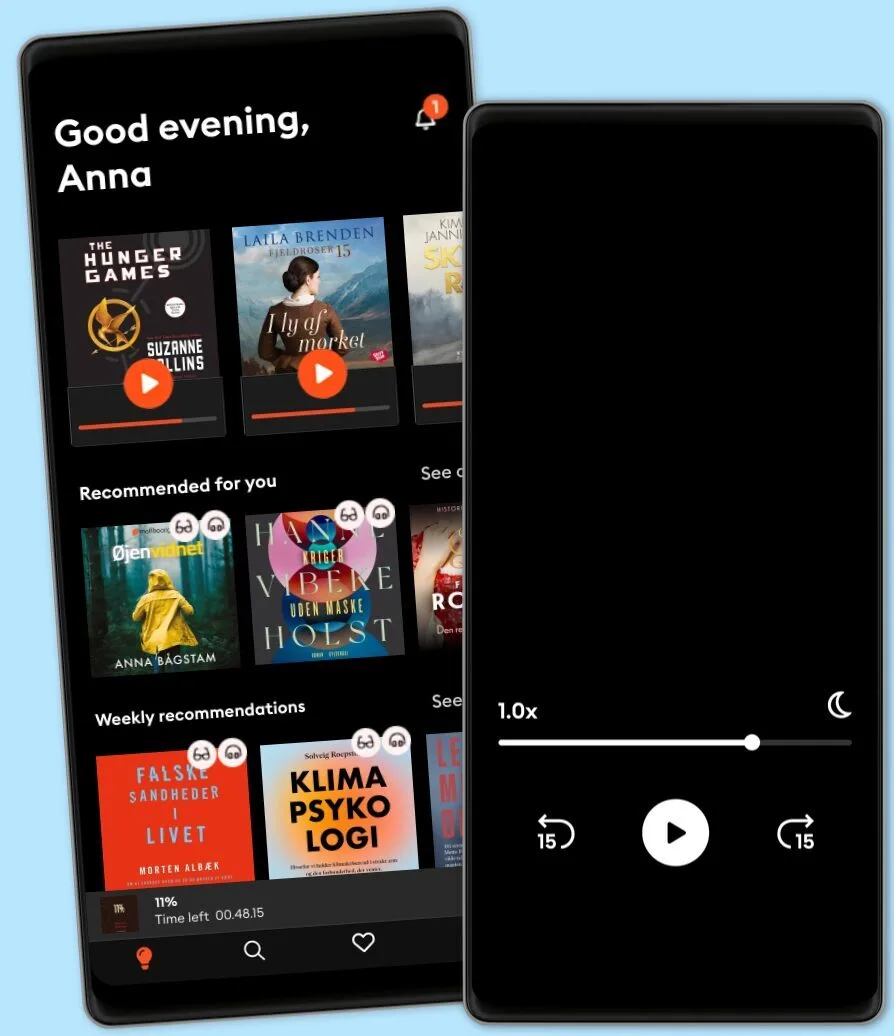


